The Hidden Costs of Unstructured AI Prompting

That "quick question" to ChatGPT is quietly bleeding your business dry. Here's the math.

I've sat through 700+ co-building sessions watching business owners interact with AI. And I've noticed something that makes me wince every single time: the ping-pong.

You know the pattern. Vague prompt. Generic response. Clarification. Slightly better response. More clarification. Marginally improved response. Frustration. Start over.

Most people treat this like a minor annoyance. Background noise. Just how AI works.

It's not. A 2025 MIT Sloan study found that 50% of performance improvements when using advanced AI systems came from users adapting their prompting behavior. Not the AI getting smarter. Not better models. Half of the productivity gains came from how people asked questions.

Which means half of what you're paying for—in time, in tokens, in cognitive overhead—depends entirely on a skill nobody taught you.

The Ping-Pong Tax

Here's something most people don't realize about how AI conversations actually work: the model itself is stateless, so the app re-sends the entire conversation history with every message. That clarifying follow-up? It's not a quick addition. It's re-sending everything you've already discussed, plus your new question.
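To make the resend effect concrete, here is a minimal sketch in Python. The token counts are invented for illustration, and the function ignores system prompts, caching, and context-window limits, so treat it as a rough model rather than how any particular provider bills.

```python
def cumulative_input_tokens(turns):
    """Total input tokens when every turn resends the full history.

    `turns` is a list of (user_tokens, reply_tokens) pairs, one per exchange.
    All numbers are hypothetical; real counts depend on the model's tokenizer.
    """
    history = 0      # tokens already in the conversation
    total_sent = 0   # running total of input tokens across all turns
    for user_tokens, reply_tokens in turns:
        history += user_tokens    # the new message joins the history
        total_sent += history     # the whole history goes in as input
        history += reply_tokens   # the reply is resent on the next turn
    return total_sent


# Hypothetical ping-pong: five vague 50-token prompts, each drawing a 300-token reply.
ping_pong = [(50, 300)] * 5
# Hypothetical structured prompt: more context up front, one exchange.
structured = [(250, 300)]

print(cumulative_input_tokens(ping_pong))    # 3750
print(cumulative_input_tokens(structured))   # 250
```

Under these made-up numbers, the five-round back-and-forth pushes 15 times more input through the model than the single structured request, even though the user typed the same 250 tokens either way. The resent history, not the typing, dominates the cost.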

This is why Sam Altman shared a jaw-dropping number at Stripe Sessions 2024: OpenAI estimates that polite but verbose prompting adds roughly $30 million monthly in compute costs across their user base. Thirty. Million. Dollars. A month.

Now, most of that isn't your problem directly. You're not paying OpenAI's server bills. But you are paying something more valuable: your time and your focus.

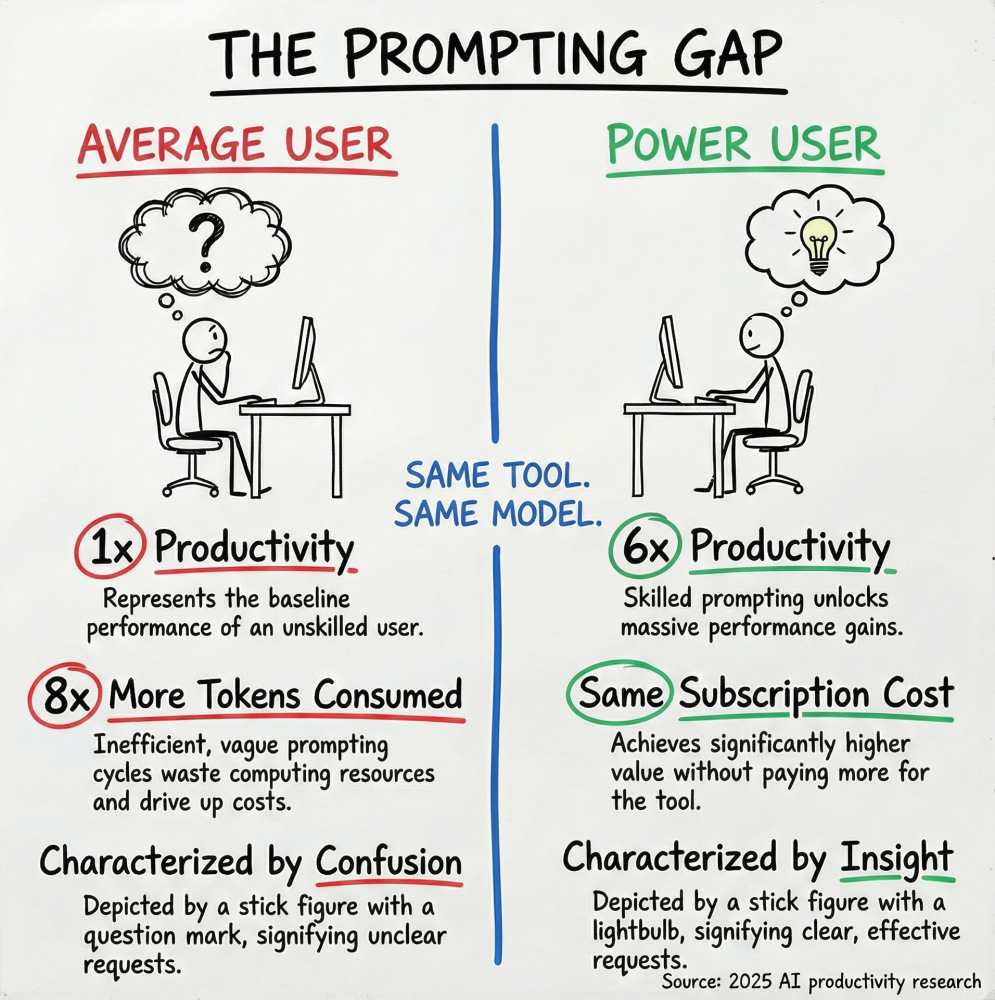

The research shows a 6x productivity gap between power users and average users. Six times. Same tool. Same subscription. Same model. One person gets six times the value because they know how to structure a prompt.

And here's the kicker—the inefficient prompters consume up to 8x more computing credits for minimal benefit. They're working harder to get less.

Why Your Brain Lies to You

If poor prompting were obviously painful, people would fix it. The problem is subtler than that.

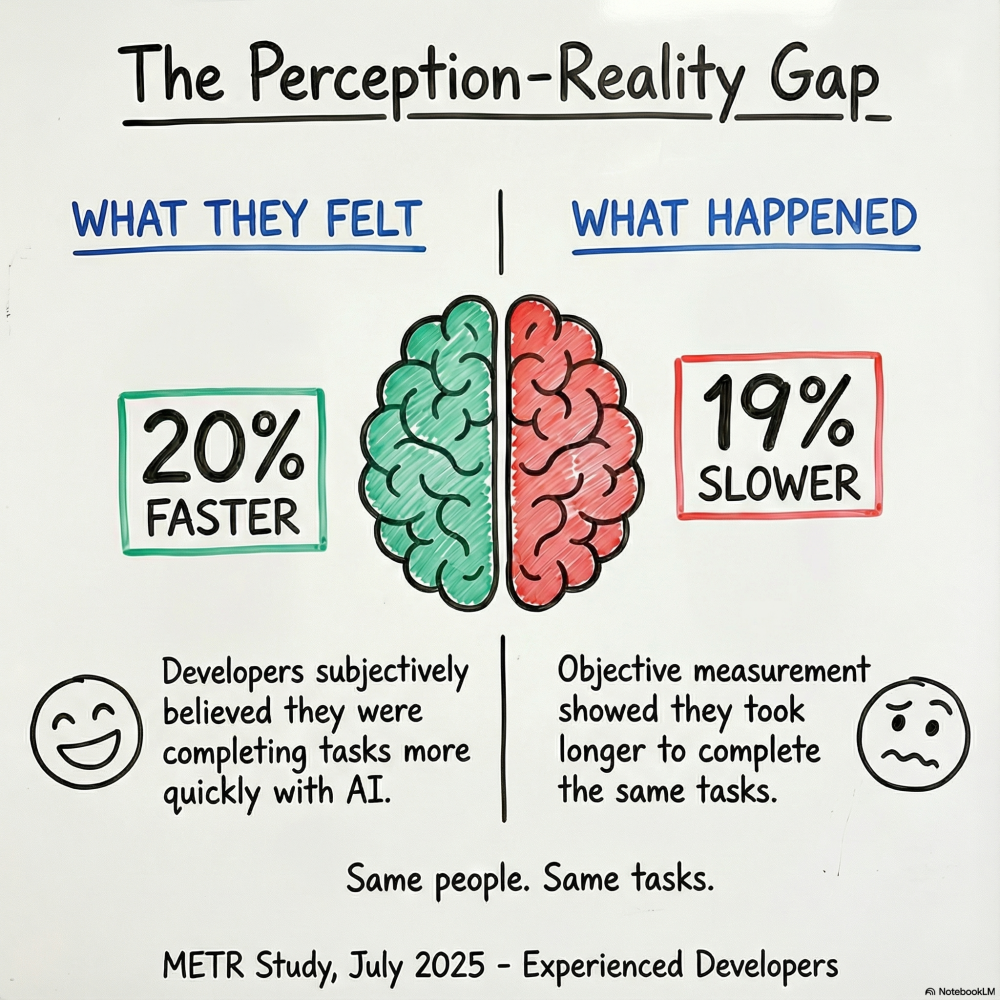

METR ran a randomized controlled trial in July 2025 with experienced developers. Using AI tools caused a 19% slowdown in actual task completion. The same developers believed they were 20% faster.

Read that again. Objectively slower. Subjectively convinced they were speeding up.

This perception-reality gap is why the problem persists. The inefficiency doesn't feel bad enough to trigger change. You think you're saving time because, well, AI is supposed to save time. That's the whole point. So your brain fills in the expected story even when reality tells a different one.

I've watched this happen in real time. A client spends 45 minutes going back and forth with Claude to get a draft email. They could have written the email themselves in 15 minutes. But they walk away feeling productive because they "used AI."

The tool isn't broken. The interaction pattern is.

The Three Prompting Sins

After watching hundreds of people interact with AI, I've identified three patterns that consistently murder efficiency:

The Search Engine Mistake. Most people treat ChatGPT like it's Google with better grammar. It's not. Google shows you links. AI thinks with you—but only if you engage it like a thinking partner. Asking "what's a good email subject line" gets you generic garbage. Explaining your audience, your offer, your previous results, and your constraints gets you something you can actually use.

The Context Starvation Problem. "Write a customer service email saying sorry for late shipping." That's a prompt I see constantly. And it produces perfectly useless output. Compare it to: "You're a customer care specialist for a boutique electronics retailer. A customer ordered a laptop on March 1st. It's arriving March 10th because of a supplier delay. Write a friendly apology under 200 words, offering a 10% discount on their next purchase." Same task. Wildly different results. The difference is context, not magic.

The Format Gamble. When you don't specify what you want—bullets versus paragraphs, formal versus casual, 100 words versus 500—you're leaving critical decisions to chance. Harvard Business Review documented what they call "workslop": 41% of workers have encountered AI-generated low-quality output, with each instance costing nearly 2 hours of rework. Two hours. Per incident. Because someone didn't spend 30 seconds specifying the output format.

The Cognitive Debt Nobody Talks About

Here's where it gets weird. And a little uncomfortable.

MIT Media Lab tracked users over four months with EEG monitoring. ChatGPT users displayed progressively lower executive control and attentional engagement over time. By the third session, users had delegated almost all cognitive work to the AI—and struggled to accurately recall or quote their own AI-assisted work afterward.

The paradox: AI reduces short-term cognitive load while creating long-term cognitive fatigue, attention depletion, and diminished self-confidence in independent decision-making.

This isn't about AI being bad. It's about interaction patterns that outsource the wrong things. When you use AI as a crutch instead of a tool—when you let it do your thinking rather than augment your thinking—you're not saving mental energy. You're spending it in ways that don't build anything.

Structured prompting doesn't just save time. It keeps you engaged with your own work in ways that matter.

The Math That Should Terrify You

Let's do some back-of-napkin calculations. Nothing fancy. Just arithmetic.

If each poorly structured interaction wastes 5-10 minutes on clarification cycles, and even one in five of your 20 daily AI interactions (not unreasonable for someone using it seriously) goes that way, that's roughly 2-3 hours of weekly waste. Over a 50-week working year, that approaches 100-150 hours.

That's three to four full work weeks. Gone. Not to difficult tasks. Not to complex problems. To ping-pong.
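If you'd rather plug in your own numbers, the napkin math fits in a few lines of Python. This is a sketch under stated assumptions: 5 workdays a week, 50 working weeks a year, a 40-hour work week, and one in five interactions going badly. That last fraction is an illustrative guess, not a measured figure; it is chosen because it lands near the 2-3 hour weekly estimate.

```python
def weekly_waste_hours(minutes_per_poor_interaction, interactions_per_day,
                       poor_fraction, workdays_per_week=5):
    """Hours lost per week to clarification cycles."""
    poor_per_week = interactions_per_day * workdays_per_week * poor_fraction
    return poor_per_week * minutes_per_poor_interaction / 60


def yearly_waste(weekly_hours, working_weeks=50, hours_per_work_week=40):
    """Return (hours lost per year, equivalent full work weeks)."""
    hours = weekly_hours * working_weeks
    return hours, hours / hours_per_work_week


# poor_fraction=0.2 is an assumption for illustration, not a researched value.
for minutes in (5, 10):
    weekly = weekly_waste_hours(minutes, interactions_per_day=20, poor_fraction=0.2)
    hours, work_weeks = yearly_waste(weekly)
    print(f"{minutes} min wasted each: {weekly:.1f} h/week, "
          f"{hours:.0f} h/year, {work_weeks:.1f} work weeks")
```

With these inputs the range comes out at roughly 1.7 to 3.3 hours a week, or about 83 to 167 hours a year. Raise `poor_fraction` and the total climbs fast, which is the whole point.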

Meanwhile, the people who've figured this out? Research shows workers using AI across 7+ task types save 5x more time than those using only 4 task types. Daily users report 92% productivity gains versus 58% for occasional users.

The gap compounds. Week by week. Month by month. The people who prompt well pull further ahead. The people who don't keep subsidizing their inefficiency with their most constrained resource: time.

What Actually Works

I'm not going to give you a 47-step framework for perfect prompting. Life's too short. But here's what I've seen work consistently across hundreds of sessions:

Front-load your context. Before you ask for anything, tell the AI who you are, what you're working on, and what constraints matter. Think of it like briefing a new contractor. They can't read your mind. Neither can the AI.

Specify your format. Want bullets? Say so. Want it under 200 words? Say so. Want a casual tone? Say so. The 30 seconds you spend clarifying format saves the 30 minutes you'd spend rewriting.

Give examples of what good looks like. "Write like this example" is worth a thousand words of explanation. Show, don't tell. The AI will pattern-match to what you actually want.

Iterate with direction, not hope. "Try again" is worthless. "Make it more conversational, remove the bullet points, and cut the length by half" gives the AI something to work with. Vague corrections produce vague improvements.
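If it helps to see the first three habits as a template, here is a minimal Python sketch that assembles a prompt from context, task, format, and an optional example. The function name `build_prompt` and the "Context / Task / Format" labels are hypothetical conventions made up for this illustration, not anything a model requires. The sample values reuse the late-laptop scenario from earlier.

```python
def build_prompt(role, situation, task, output_format, example=None):
    """Assemble a structured prompt: context first, then task, format, example.

    The section labels are an illustrative convention, not a required syntax.
    """
    parts = [
        f"Context: You are {role}. {situation}",
        f"Task: {task}",
        f"Format: {output_format}",
    ]
    if example:
        # Showing a sample of the desired style is optional but often helps.
        parts.append(f"Example of the style I want:\n{example}")
    return "\n\n".join(parts)


prompt = build_prompt(
    role="a customer care specialist for a boutique electronics retailer",
    situation=("A customer ordered a laptop on March 1st. "
               "It's arriving March 10th because of a supplier delay."),
    task="Write an apology offering a 10% discount on their next purchase.",
    output_format="Under 200 words, friendly tone.",
)
print(prompt)
```

The value isn't in the code. It's that every blank in the template forces a decision you would otherwise make three clarification rounds later.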

The Real Investment

Here's what kills me about all this: the fix isn't hard. We're not talking about learning to code or getting a certification. We're talking about 10-15 minutes of intentional practice.

That's it. A few minutes learning to structure your requests, and you unlock dramatically higher returns on every future AI interaction. The alternative—accepting the ping-pong pattern as inevitable—means subsidizing inefficiency with your time. Forever.

And honestly? The people selling you AI tools aren't super motivated to tell you this. Your inefficiency is their engagement metric. Your wasted tokens are their revenue. The worse you are at prompting, the more dependent you become on the next upgrade, the next feature, the next model that will finally make things "easy."

But the gains don't come from better models. They come from better prompts. Half of them, anyway.

Might be worth spending those 10-15 minutes. The alternative is paying the ping-pong tax for the rest of your AI-assisted career.

The tool ain't broke. How you use it is.

Your call.